Build Your Security Data Lake in Hours

Connect dozens of log sources with zero custom code. Transform and enrich your data during ingestion. Store everything in your own S3 buckets with complete data ownership.

30+

< 5 min

~$0.02 / GB

100%

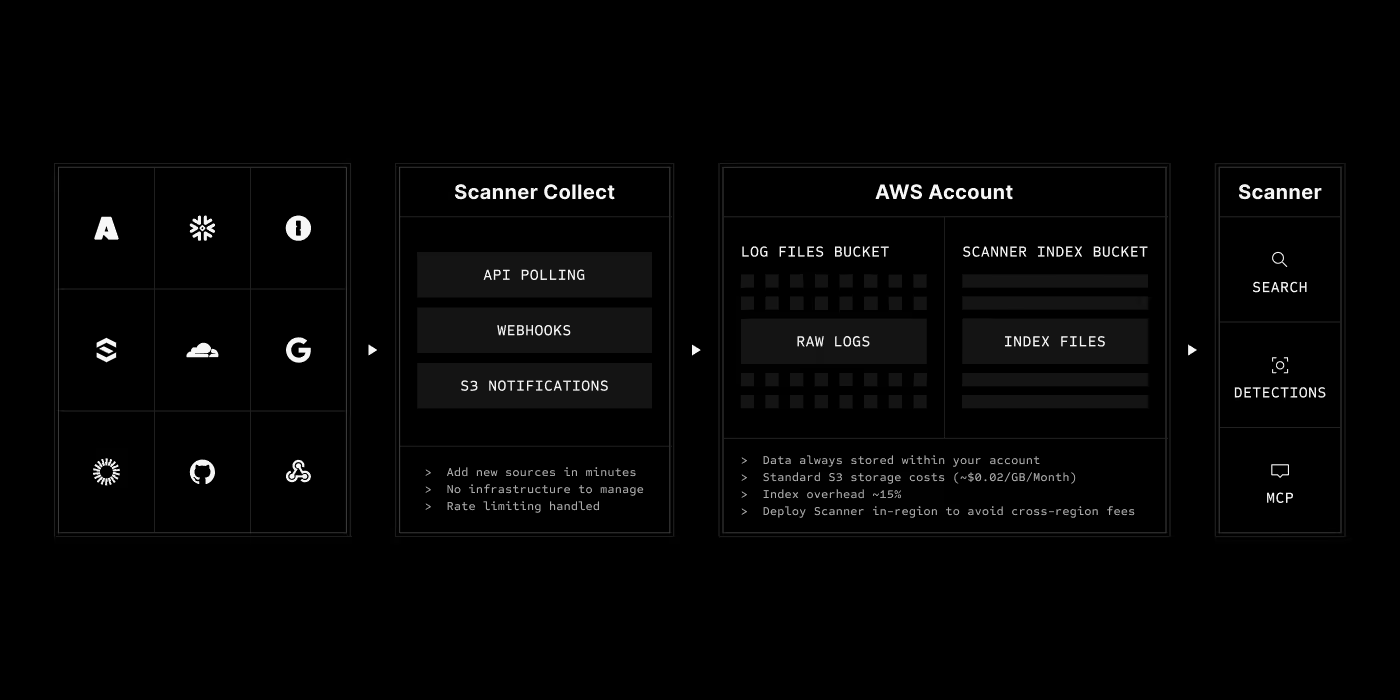

How Scanner Collect Works

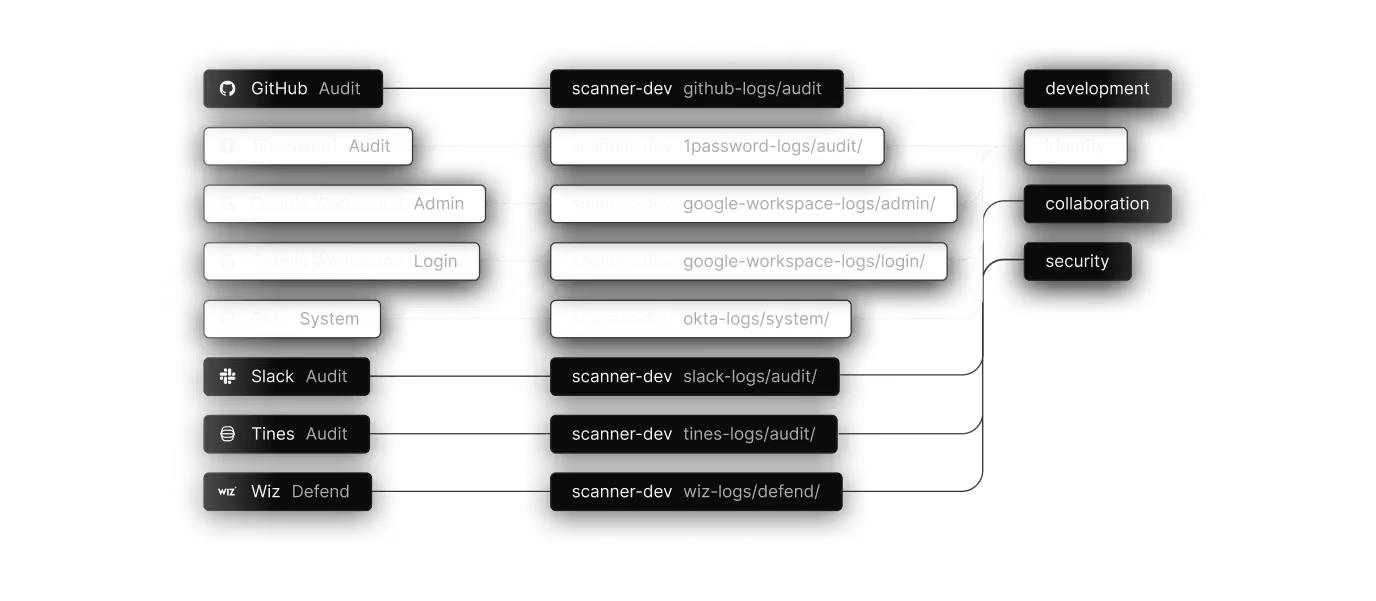

Connect your log sources

Choose from 30+ pre-built integrations covering SaaS applications, cloud platforms, and security tools. No scripts to write, no agents to deploy, no API tokens to manage manually.

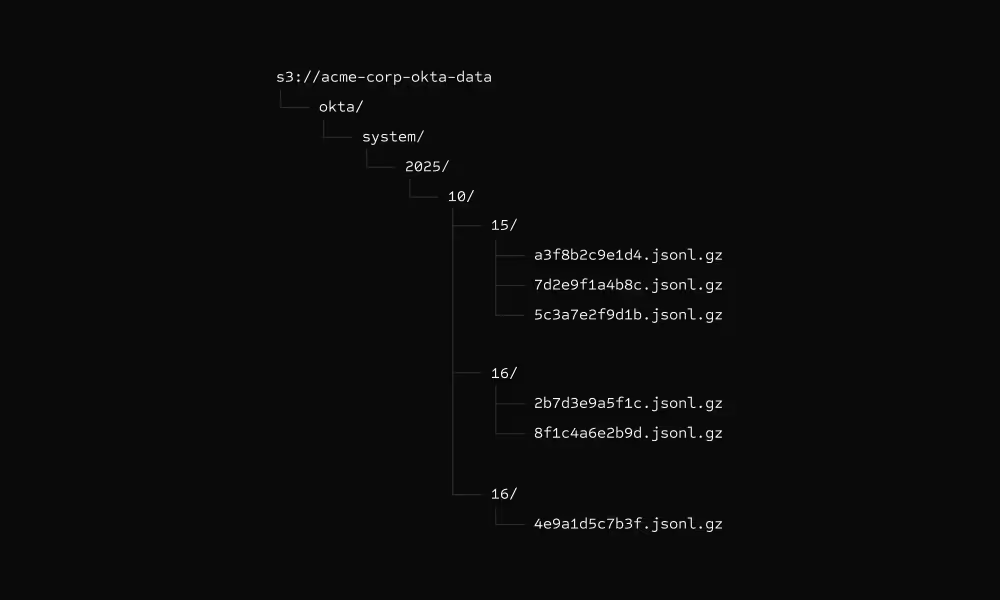

Logs delivered to your S3

All logs are written directly to your S3 buckets as gzipped JSON files. You maintain complete data custody - your data never leaves your AWS account.

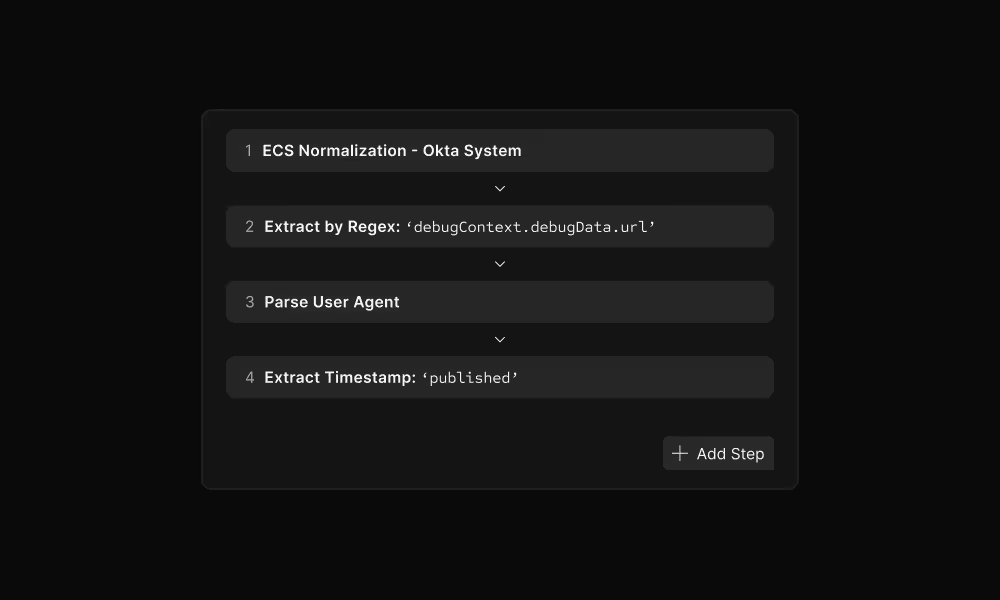

Transform your logs

Parse and normalize logs during ingestion using VRL (Vector Remap Language). Extract fields, handle timestamps, and structure unstructured data - all before indexing.

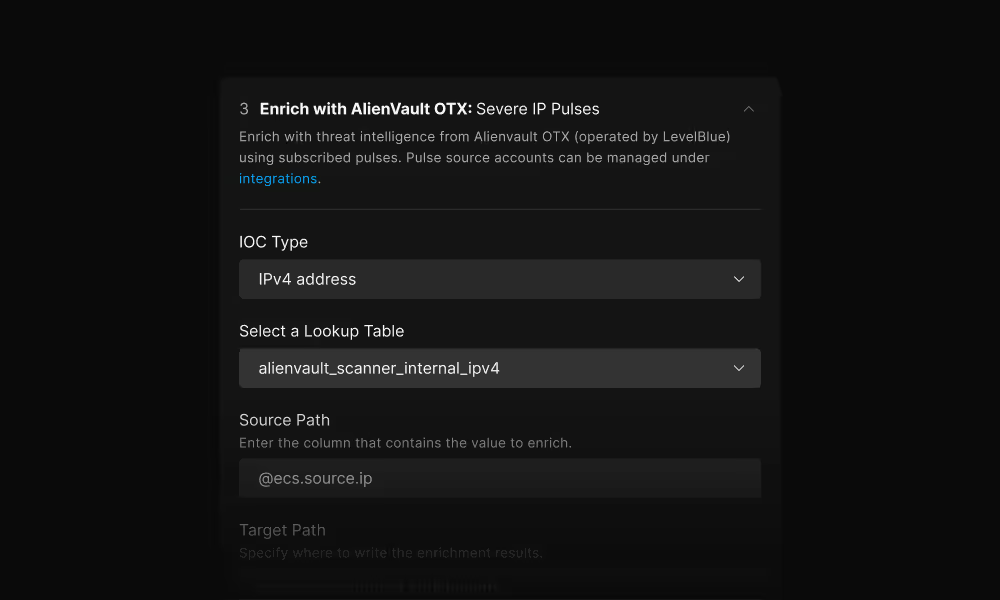

Enrich with context

Add organizational and threat intelligence context during ingestion. All enrichment happens at indexing time, making the context immediately searchable for investigations and detections.

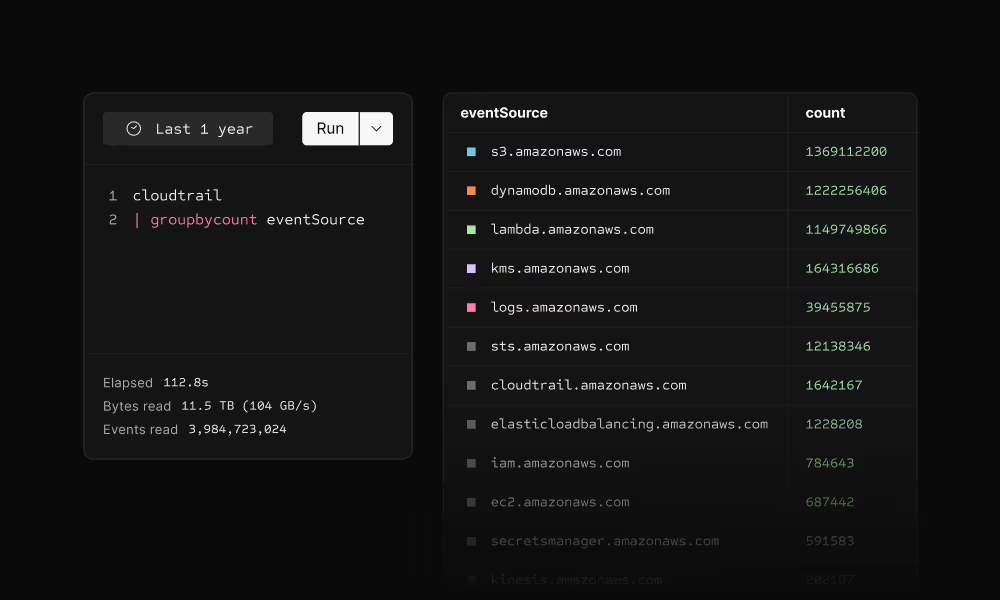

Indexed & immediately searchable

Scanner builds compact index files alongside your logs, enabling full-text search across petabytes in seconds. Original logs stay untouched in S3. Index files use ~15% of storage overhead and remain in your buckets.

Data lake architecture

Your logs stay in your S3 buckets. Scanner adds lightweight indexes for instant search. No data leaves your environment.

Pre-built integrations

Connect your entire security stack in an afternoon. More sources added regularly based on customer demand.

Scanner Collect vs. building it yourself

Compare Scanner to custom log pipelines and traditional SIEMs.

FAQ

Building a log collection pipeline requires writing code to handle API pagination, rate limiting, authentication, retries, and monitoring for each source. Then you need to maintain it as APIs change. Scanner Collect provides 30+ pre-built integrations that handle all of this automatically, getting you from zero to collecting logs in under 5 minutes instead of weeks of engineering time.

All logs are stored in your own S3 buckets in your AWS account. Scanner never takes custody of your data. You can deploy Scanner in the same region as your buckets to avoid cross-region costs and meet data residency requirements.

Yes! If you're already delivering logs to S3 (via CloudTrail, third-party tools, or custom pipelines), Scanner can index them in place using S3 event notifications. Supports JSON, Parquet, CSV, and plaintext formats.

Your logs stay in your S3 buckets as standard gzipped JSON files. You can continue using them with any tool. No vendor lock-in. The index files are also in your buckets - you can delete them if you no longer need Scanner's search capabilities.

Transformations and enrichment happen during ingestion, so they only apply to new logs. Historical logs are not retroactively modified. If you need to re-process historical data with new transformations, you can trigger a re-indexing job.

Absolutely. Since logs are in your S3 buckets as standard JSON files, you can set up S3 event notifications to trigger Lambda functions, stream to Kinesis, or use any ETL tool to forward data wherever you need. Scanner doesn't lock you into any specific workflow.

Start Building your Security Data Lake

See how Scanner Collect can help you consolidate all your security logs, transform them with rich context, and make them instantly searchable - all in an afternoon.