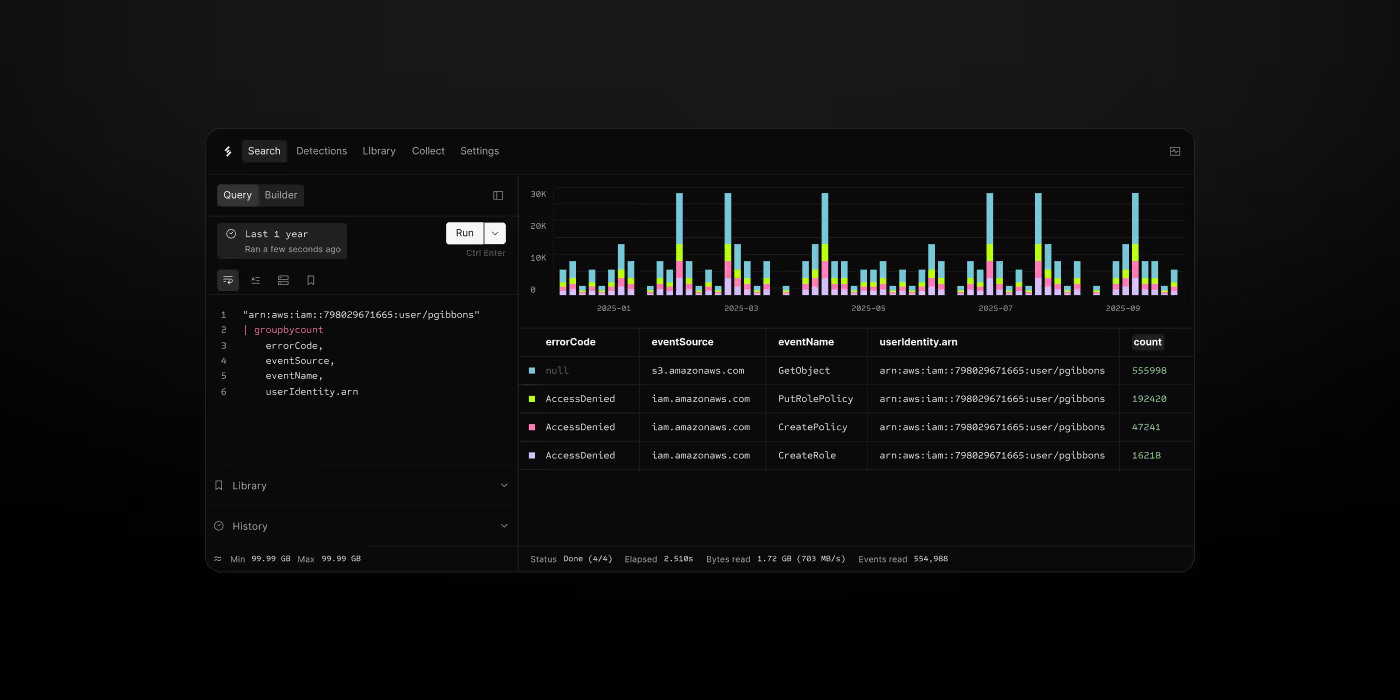

Search Petabytes in Seconds

Full-text search across years of security logs in seconds, not hours. Inverted indexes and serverless execution make iterative investigation actually possible.

<10s

100x

1–10s

$0.01—$0.10

Traditional Data Lakes Are Too Slow for Security

When queries take 30+ minutes, investigation becomes impossible. You can't iterate, can't pivot, can't pursue multiple hypotheses.

Problem: Full Scans

Traditional tools (Athena, Presto) scan entire tables even for simple queries. Searching for a specific IP or API key means reading and parsing every log file.

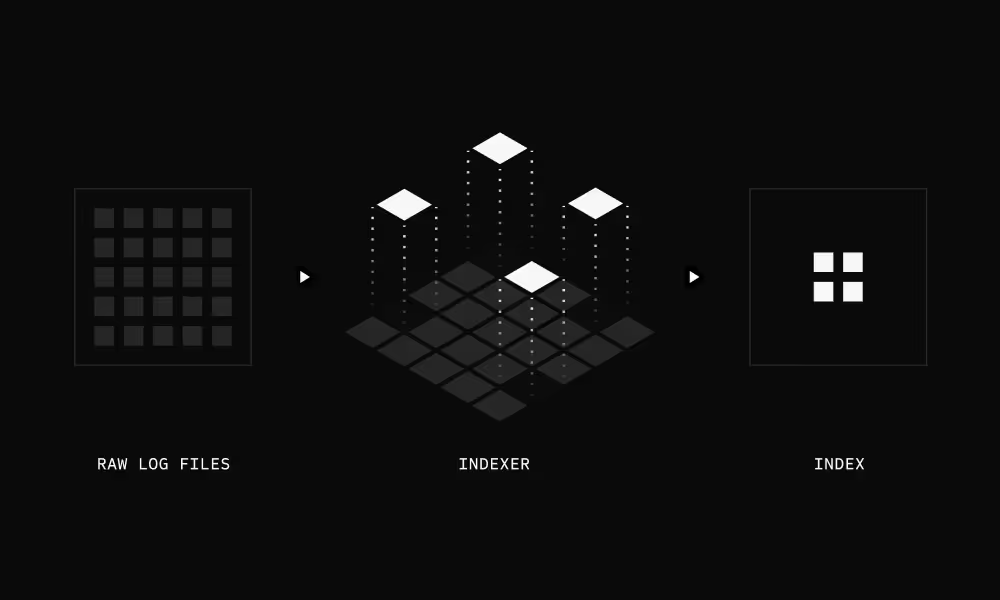

Solution: Inverted Indexes

Scanner builds indexes at ingestion time. Queries look up which files contain matching data, then scan only those files. Skip everything else.

How Scanner Search Works

Indexes built when logs arrive in S3

When logs arrive in S3, Scanner parses them once and builds an inverted index: a lookup table mapping every field value to the files containing it. Index files are stored alongside your logs in S3.

Queries find relevant data instantly

When you search, Scanner reads the index files (not the original log files). It looks up each search term, gets the index segment lists, and finds the intersection—segments that match all your conditions. Only those segments get scanned.

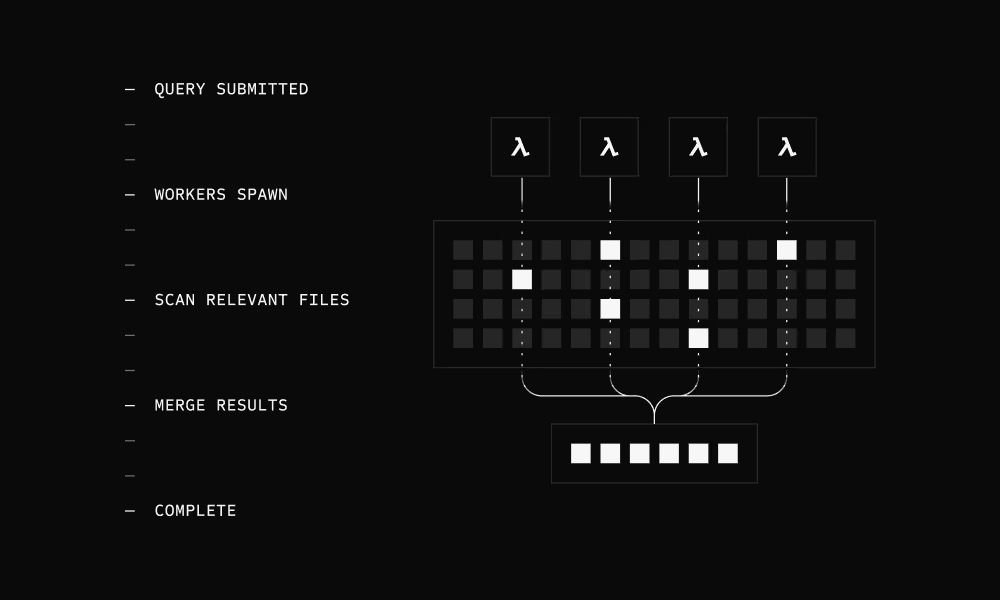

Parallel serverless execution

Lambda workers spawn automatically - analyzing index files in parallel. They identify matching log segments in parallel, scan only relevant data, and merge results. Functions terminate immediately after. You only pay for seconds of actual compute.

Speed changes what's possible

Investigation is iterative. Every answer leads to more questions. Fast queries mean you can actually follow every lead. Traditional data lake tools like Athena and Presto are too slow for this workflow.

3 queries in 2 hours

Suspicious API key accessing S3 buckets from unknown IP address.

Query 1:

When did this key first appear?

45 minutes

Query 2:

What other buckets has it accessed?

38 minutes

Query 3:

Any related suspicious activity?

52 minutes

Total: 2 hours, 15 minutes

Investigation has barely started. Window for containment is closing.

20 queries in 4 minutes

But you can pivot immediately on every finding.

Query 1:

When did this key first appear?

8 minutes

Query 2:

What other buckets has it accessed?

5 seconds

Query 3:

Any related suspicious activity?

12 seconds

Query 4-20

Who created the key? When? From where? What else did they do? Which resources are affected? Any lateral movement?

3 minutes combined

Total: 4 minutes

Root cause identified: compromised CI/CD pipeline. All affected resources mapped. Systems isolated.

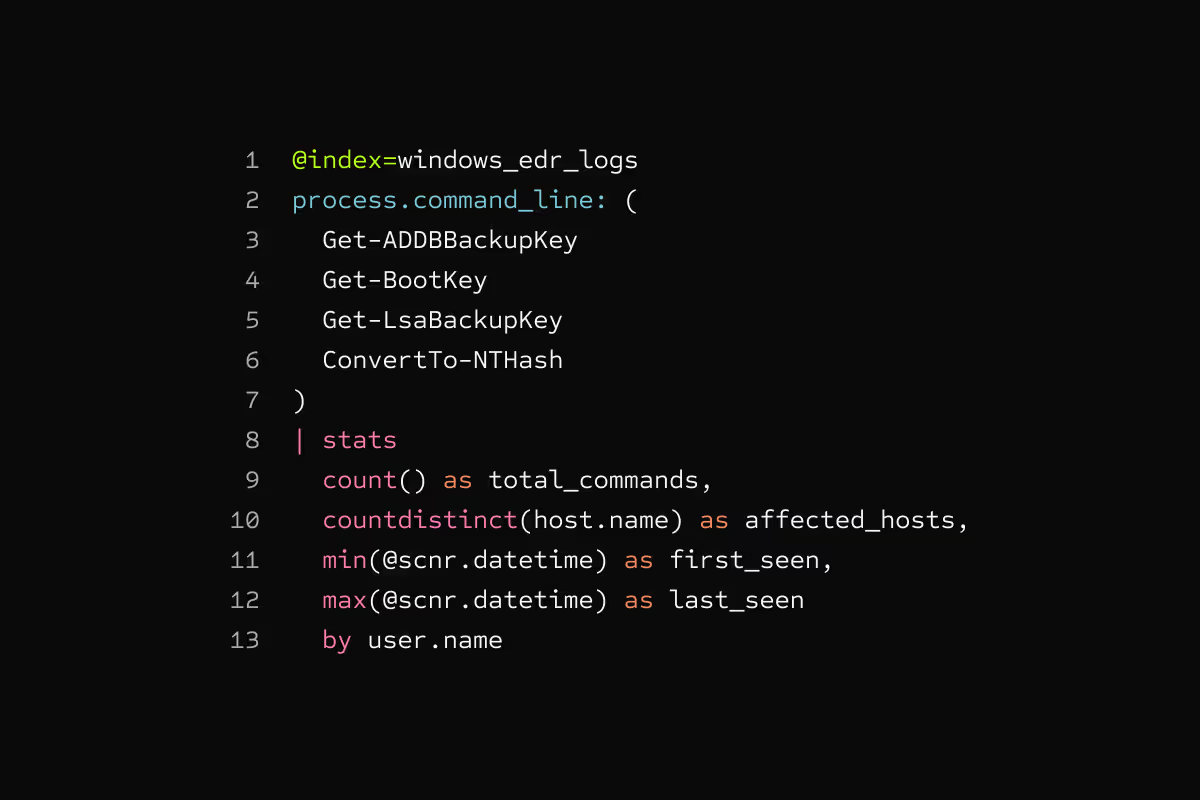

Built for security investigations

Fast queries are just the start. Scanner is designed for how security teams actually work.

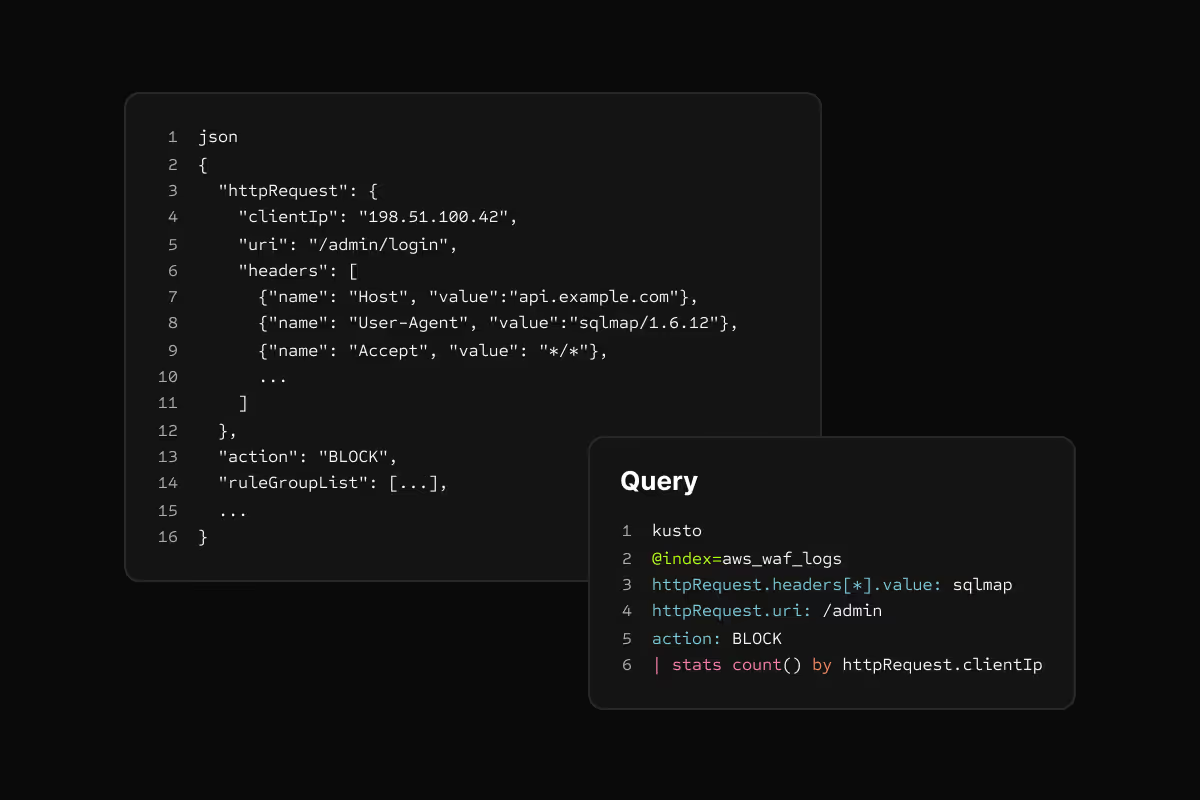

Full-text search

Search for any text in any field. No schema required. Find IPs, usernames, file paths, or error messages across all your logs with one query.

Nested field access

Query deeply nested JSON directly. No JSON extraction functions. Indexes work on nested fields automatically.

Temporal context

"Show me everything from this user in a 10-minute window." Jump from one event to all related activity across log sources. Context is critical for investigations.

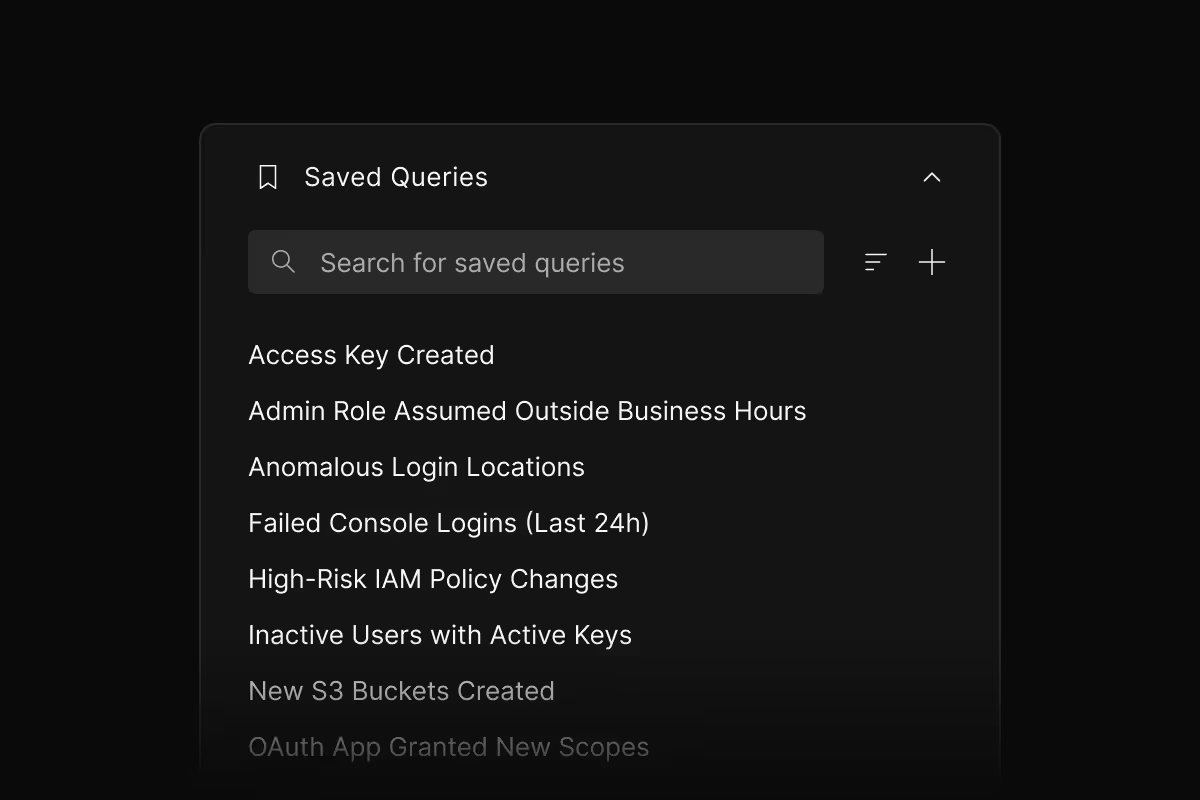

Saved queries

Save complex queries and share with your team. Rerun investigations instantly. Build a library of investigative playbooks that work.

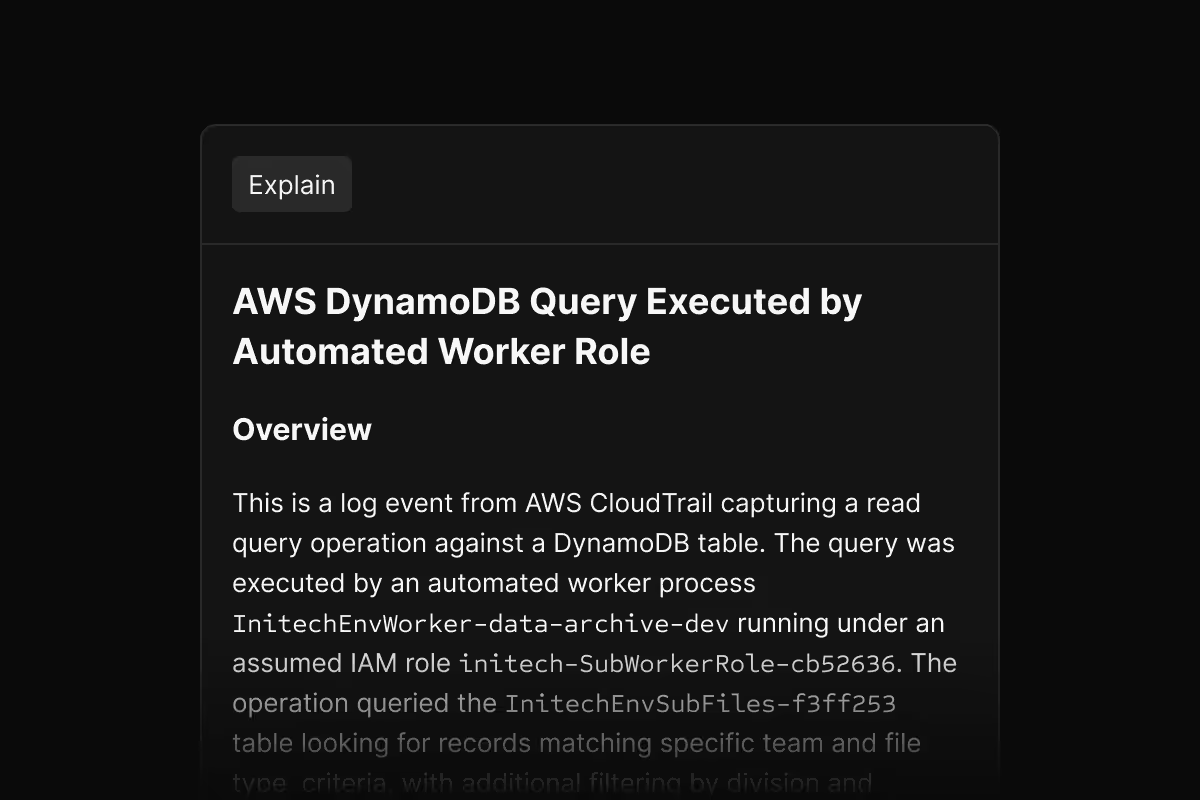

AI explain

Click any log event to get a plain-English explanation. Understand what happened, why it might matter, and what to look for next - without being a log format expert.

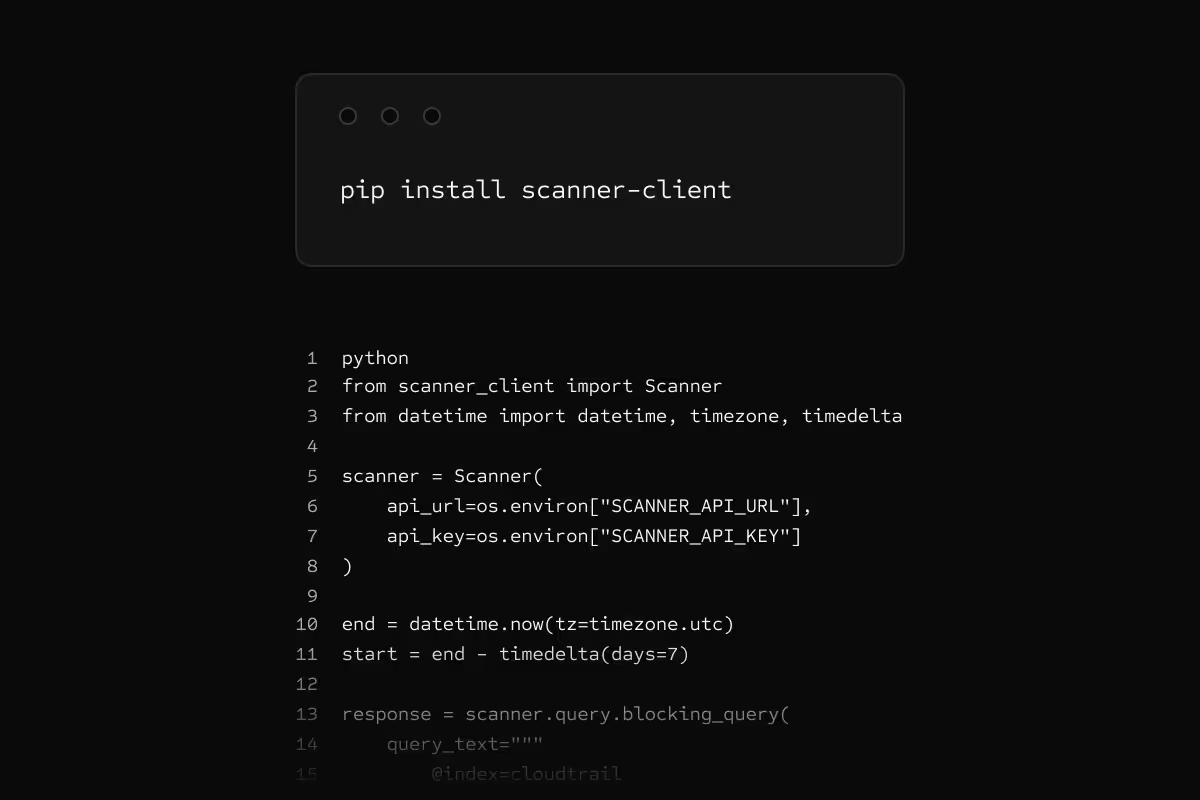

API access

Query programmatically from notebooks, scripts, or automation. Same speed as the UI. Build custom workflows, enrich alerts, or integrate with your tools.

FAQ

Scanner builds inverted indexes during ingestion that map field values to files. When you query, Scanner looks up your search terms in the index to identify which files contain matches, then scans only those files. Traditional tools scan everything. The index tells Scanner exactly where to look, eliminating the need to read files that don't contain matching data.

Index files are approximately 15% the size of your uncompressed logs (~150GB of indexes per 1TB of logs). This is a deliberate trade-off: some additional S3 storage in exchange for 100-1000x faster queries. Index files live in your S3 buckets and can use any storage tier that supports GetObject requests.

Yes. Scanner indexes all your log sources (CloudTrail, Okta, GitHub, etc.) and lets you query across them with one search. Use normalized ECS fields to query consistently, or search source-specific fields when needed. The speed is the same whether you're searching one source or twenty.

As far back as you have indexed data. There are no retention limits or time-based pricing. Customers routinely search across 2-3 years of logs with the same sub-second performance. Long-term retention is practical because S3 storage is cheap and queries stay fast regardless of data volume.

Scanner uses a simple, intuitive query syntax similar to Splunk or Elasticsearch. Example: eventName:PutBucketPolicy sourceIPAddress:"192.168.*" requestParameters.bucketName:prod-*. The syntax supports wildcards, boolean logic, field filtering, and aggregations. There's also a visual query builder for those who prefer point-and-click.

Scanner can index JSON, Parquet, CSV, and plaintext logs. The indexing approach works regardless of the source format. You don't need to convert your logs to a specific format—Scanner handles whatever is in your S3 buckets.

Search your data like it’s 2025

See how Scanner can turn your S3 data lake into a high-performance search engine. Query years of logs in seconds, not hours.